Infographic: Making the Most of Databricks

10 tips to help you maximize the value of your investment

By Insight Editor / 13 Jul 2021 / Topics: Data and AI


Databricks provides a powerful, unified platform for managing your data architecture — enabling data scientists, analysts and engineers to seamlessly collaborate and deliver business intelligence and machine learning solutions.

But with so many capabilities and features, how can you ensure you’re making the most of your investment in this toolset? Use the questions and expert tips in this checklist to find out.

Accessibility note: The listicle is transcribed below the graphic.

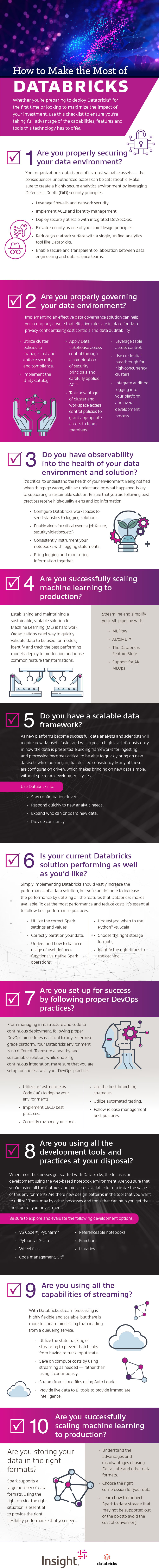

Whether you’re preparing to deploy Databricks for the first time or looking to maximize the impact of your investment, use this checklist to ensure you’re taking full advantage of the capabilities, features and tools this technology has to offer.

Your organization’s data is one of its most valuable assets, and the consequences of unauthorized access can be catastrophic. Make sure to create a highly secure analytics environment by leveraging Defense-in-Depth (DiD) security principles.

Implementing an effective data governance solution can help your company ensure that clear rules are in place for data privacy, confidentiality, cost controls and data auditability.

It’s critical to understand the health of your environment. Being notified when things go wrong, with an understanding of what happened, is key to supporting a sustainable solution. Ensure that you are following best practices to receive high-quality alerts and log information.

Establishing and maintaining a sustainable, scalable solution for Machine Learning (ML) is hard work. Organizations need a way to quickly validate the data used for models, identify and track the best-performing models, deploy to production and reuse common feature transformations.

Streamline and simplify your ML pipeline with:

As new platforms become successful, data analysts and scientists will require new datasets faster and will expect a high level of consistency in how the data is presented. Building frameworks for ingestion and processing becomes critical for bringing on new datasets quickly while building in that consistency. Many of these frameworks are configuration driven, which makes bringing on new data simple, without spending development cycles.
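To make the configuration-driven idea concrete, here is a minimal sketch in plain Python. It is not a Databricks API. The `DATASETS` config, the `READERS` table and the `ingest` function are all hypothetical names chosen for illustration, and a real framework would read from storage rather than from in-memory strings. The point it shows is that onboarding a new dataset means adding a config entry, not writing new code.

```python
import csv
import io
import json

# Hypothetical configuration: one entry per dataset. Each entry names the
# source format and the columns to keep, with the type each is cast to.
DATASETS = {
    "orders": {"format": "csv", "columns": {"order_id": int, "amount": float}},
    "events": {"format": "jsonl", "columns": {"user": str, "clicks": int}},
}


def _read_csv(text):
    """Parse CSV text with a header row into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def _read_jsonl(text):
    """Parse newline-delimited JSON, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


# Supporting a new format means registering one more reader here.
READERS = {"csv": _read_csv, "jsonl": _read_jsonl}


def ingest(name, raw_text, config=DATASETS):
    """Parse raw_text for dataset `name` and return consistently typed rows."""
    spec = config[name]
    rows = READERS[spec["format"]](raw_text)
    # Keep only the configured columns and cast each to its configured type,
    # so every dataset is presented in the same predictable shape.
    return [
        {col: cast(row[col]) for col, cast in spec["columns"].items()}
        for row in rows
    ]


if __name__ == "__main__":
    print(ingest("orders", "order_id,amount,note\n1,9.50,x\n2,3,y\n"))
```

Under these assumptions, bringing on a third dataset would only require a new key in `DATASETS`; the `ingest` function itself would not change.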

Use Databricks to:

Implementing Databricks alone should improve the performance of a data solution, but you can gain more by using the full set of features the platform makes available. To get the most performance while reducing costs, it’s essential to follow performance best practices.

From managing infrastructure and code to continuous deployment, following proper DevOps procedures is critical to any enterprise-grade platform. Your Databricks environment is no different. To ensure a healthy and sustainable solution, while enabling continuous integration, make sure that you are set up for success with your DevOps practices.

When most businesses get started with Databricks, the focus is on development using the web-based notebook environment. Are you sure that you’re using all the features and processes available to maximize the value of this environment? Are there new design patterns in the tool that you want to utilize? There may be other processes and tools that can help you get the most out of your investment.

Be sure to explore and evaluate the following development options:

With Databricks, stream processing is highly flexible and scalable, but there is more to stream processing than reading from a queueing service.

Spark supports a large number of data formats. Using the right one for the right situation is essential to get the flexibility and performance that you need.
